In previous articles, I described an approach to deploying a FastAPI service to ECS. However, it doesn’t include any information on how to provision a database as storage for the api service. In this post, I will describe an approach I took to provision an RDS Aurora cluster as data storage for an ECS service running as a container in a container instance. In addition, we will create an RDS proxy to communicate between the api service and the RDS cluster.

Firstly, we need to extend the VPC to include database subnets. This would allow us to deploy the RDS Aurora cluster into the database subnet. Note that the RDS cluster is set to private and can only be accessed from within the VPC. We create a single cluster with one reader instance:

resource "aws_rds_cluster" "postgresql" {

cluster_identifier = var.rds_cluster_name

engine = "aurora-postgresql"

engine_version = "15.4"

database_name = "postgres"

master_username = "postgres"

manage_master_user_password = true

db_cluster_instance_class = null

db_subnet_group_name = var.database_subnet_group_name

port = 5432

skip_final_snapshot = true

storage_encrypted = true

vpc_security_group_ids = [aws_security_group.dbtest_sg.id]

}

resource "aws_rds_cluster_instance" "cluster_instances" {

count = 1

identifier_prefix = "dbtestinstance"

cluster_identifier = aws_rds_cluster.postgresql.id

instance_class = "db.t3.medium"

engine = "aurora-postgresql"

engine_version = "15.4"

db_subnet_group_name = var.database_subnet_group_name

depends_on = [aws_rds_cluster.postgresql]

}We need to allow traffic to flow between the ECS Service and the proxy and between the proxy and the database. We create a security group where we allow traffic from resources that belong to the container instances security group and resources that have the ECS service security group. The self-reference security group self=true is to allow traffic from the proxy to the database via 5432.

resource "aws_security_group" "dbtest_sg" {

name = "DBTestSG"

description = "Allow TLS inbound traffic for DB"

vpc_id = var.vpc_id

ingress {

from_port = 5432

to_port = 5432

protocol = "tcp"

security_groups = [var.ecs_container_instance_sg]

description = "Inbound from ECS Container Instance"

}

ingress {

from_port = 5432

to_port = 5432

protocol = "tcp"

security_groups = [var.ecs_service_sg]

description = "Inbound from ECS Service"

}

ingress {

from_port = 5432

to_port = 5432

protocol = "tcp"

self = true

}

egress {

from_port = 0

to_port = 0

protocol = "-1"

cidr_blocks = ["0.0.0.0/0"]

}

tags = {

Name = "dbservice"

}

}By setting storage_encrypted = true, I have enabled automatic password encryption in the RDS cluster. RDS will automatically create a secrets manager secret with the username and password of the cluster encrypted using the default aws/secretsmanager key. We need to create an IAM role to allow the proxy to be able to decrypt and read the database credentials via secrets manager. Below is an example of a policy that can be attached to the role:

data "aws_iam_policy_document" "secrets_manager_permissions" {

statement {

sid = "GetSecretValue"

effect = "Allow"

actions = [

"secretsManager:GetSecretValue"

]

resources = ["${aws_rds_cluster.postgresql.master_user_secret[0].secret_arn}"]

}

statement {

sid = "GetAppSecretValue"

effect = "Allow"

actions = [

"secretsManager:GetSecretValue"

]

resources = ["${aws_secretsmanager_secret.application.arn}"]

}

statement {

sid = "DecryptSecretValue"

effect = "Allow"

actions = [

"kms:Decrypt"

]

resources = ["arn:aws:kms:eu-west-1:XXXXX:key/c5fced17-0917-461f-bc50-9155765d999d"]

condition {

test = "StringEquals"

variable = "kms:ViaService"

values = ["secretsmanager.eu-west-1.amazonaws.com"]

}

}

}Note that for above, we are allowing both the RDS created credentials and a set of custom application credentials to be read by the proxy.

Next, I create a terraform_data resource that runs a local python script which creates the initial application database after the cluster instance is created. The script uses boto3 to invoke a shell script via SSM RunCommand on any available container instances:

resource "terraform_data" "setup_database" {

# Create initial db for api

# Calls SSM runscript to setup initial database

provisioner "local-exec" {

command = "python ${path.module}/setup_database.py --region ${var.region} --s3-script-path '${local.s3_script_url}' --db-secretid '${aws_rds_cluster.postgresql.master_user_secret[0].secret_arn}' --dbname '${var.database_application_name}' --dbuser '${var.database_application_user}' --dbpassword '${var.database_application_password}' --host '${aws_rds_cluster.postgresql.endpoint}'"

}

depends_on = [aws_rds_cluster_instance.cluster_instances]

}The above runs a local python script that creates the initial database the application connects to. The script is stored in an S3 bucket and is invoked via Systems Manager RunCommand, passing it the instance id of an container instance. The script installs psql client and creates the initial database based on user supplied username and password:

#!/bin/bash

set -e

echo "Installing psql"

sudo yum install postgresql15 -y

echo "Creating user database..."

PGPASSWORD=$POSTGRES_PASSWORD psql sslmode=require -h $POSTGRES_HOST -U $POSTGRES_USER <<-EOSQL

CREATE DATABASE $DB_NAME;

CREATE ROLE lab_tech;

GRANT CONNECT ON DATABASE $DB_NAME TO lab_tech;

GRANT ALL PRIVILEGES ON DATABASE $DB_NAME TO lab_tech;

CREATE ROLE $DB_USER LOGIN PASSWORD '$DB_PASSWORD';

GRANT lab_tech TO $DB_USER;

EOSQL

echo "Creating user schema..."

PGPASSWORD=$DB_PASSWORD psql sslmode=require -h $POSTGRES_HOST -U $DB_USER -d $DB_NAME <<-EOSQL

CREATE SCHEMA $DB_USER;

EOSQLThe above script logs into the default postgres DB as the postgres user. It creates the application database and attaches a role for a newly created user. This user will be used in the application database credentials to connect to the database.

According to SQLAlchemy Remote Schema Table introspection, postgresql will use the public schema to create tables. This will fail in postgresql 15 and above. After creating the database user, we login to the database as said user and create a schema based on the username. When the user authenticates to the specific database via the application, it will use this schema by default, overriding the public access denied error.

Creating the proxy

There are some caveats to creating and using RDS proxy:

-

RDS proxy needs a reader endpoint to work hence I have to create a minimal Aurora cluster with at least 1 reader as above.

-

If the RDS DB is encrypted, we also need to enable encryption on the RDS proxy or it will fail with errors such as

Proxy authentication with PostgreSQL native password authentication failed for user "postgres" with TLS off. Reason: TLS is required by the current configuration. If you're using the PostgreSQL CLI, use the flag sslmode=require in 8.4 or newer versions.

There have been articles online on the type of certificate to use for encryption which could also trigger an error but I have no issue with using the default latest certifcates.

-

Enable the cloudwatch logging option when creating the proxy as it enables easier debugging via Cloudwatch Logs for every proxy connection.

-

We also need to enable additional options to the database connection object for the api application using SQLAlchemy, which will be detailed below.

The following terraform resources create the RDS proxy:

resource "aws_iam_role" "this" {

name_prefix = "RDSProxyRole"

assume_role_policy = data.aws_iam_policy_document.assume_role_policy.json

}

data "aws_iam_policy_document" "assume_role_policy" {

statement {

actions = ["sts:AssumeRole"]

effect = "Allow"

principals {

type = "Service"

identifiers = ["rds.amazonaws.com"]

}

}

}

resource "aws_iam_role_policy" "this" {

role = aws_iam_role.this.id

policy = data.aws_iam_policy_document.secrets_manager_permissions.json

}

data "aws_iam_policy_document" "secrets_manager_permissions" {

statement {

sid = "GetSecretValue"

effect = "Allow"

actions = [

"secretsManager:GetSecretValue"

]

resources = ["${aws_rds_cluster.postgresql.master_user_secret[0].secret_arn}"]

}

statement {

sid = "GetAppSecretValue"

effect = "Allow"

actions = [

"secretsManager:GetSecretValue"

]

resources = ["${aws_secretsmanager_secret.application.arn}"]

}

statement {

sid = "DecryptSecretValue"

effect = "Allow"

actions = [

"kms:Decrypt"

]

resources = ["arn:aws:kms:eu-west-1:XXXXX:key/c5fced17-0917-461f-bc50-9155765d999d"]

condition {

test = "StringEquals"

variable = "kms:ViaService"

values = ["secretsmanager.eu-west-1.amazonaws.com"]

}

}

}

resource "aws_db_proxy" "example" {

name = "dbtestproxy"

debug_logging = true

engine_family = "POSTGRESQL"

idle_client_timeout = 1800

require_tls = true

role_arn = aws_iam_role.this.arn

vpc_security_group_ids = [aws_security_group.dbtest_sg.id]

vpc_subnet_ids = var.database_subnets

auth {

auth_scheme = "SECRETS"

description = "EXAMPLE"

iam_auth = "DISABLED"

secret_arn = aws_rds_cluster.postgresql.master_user_secret[0].secret_arn

}

auth {

auth_scheme = "SECRETS"

description = "APPLICATION DB CREDS"

iam_auth = "DISABLED"

secret_arn = aws_secretsmanager_secret.application.arn

}

tags = {

Name = "example"

Key = "value"

}

depends_on = [aws_rds_cluster.postgresql]

}

resource "aws_db_proxy_default_target_group" "example" {

db_proxy_name = aws_db_proxy.example.name

connection_pool_config {

connection_borrow_timeout = 120

max_connections_percent = 100

max_idle_connections_percent = 50

}

depends_on = [aws_rds_cluster.postgresql]

}Next we create the target group to link the proxy to the RDS cluster:

resource "aws_db_proxy_target" "example" {

db_cluster_identifier = aws_rds_cluster.postgresql.cluster_identifier

db_proxy_name = aws_db_proxy.example.name

target_group_name = aws_db_proxy_default_target_group.example.name

depends_on = [aws_rds_cluster.postgresql]

}We also need to create a separate secrets in secrets manager based on the application database credentials:

locals {

data = jsondecode(data.aws_secretsmanager_secret_version.master_user_secret.secret_string)

new_secret = {

username = var.database_application_user

password = var.database_application_password

host = aws_db_proxy.example.endpoint

port = aws_rds_cluster.postgresql.port

}

}

resource "aws_secretsmanager_secret" "application" {

name = var.rds_secret_name

}

resource "aws_secretsmanager_secret_version" "application" {

secret_id = aws_secretsmanager_secret.application.id

secret_string = jsonencode(local.new_secret)

}We need to update the task definition and the task execution role to read the secret above. Within the task defintion, we can define the secrets as environment variables as long as we allow the task execution role permission to decrypt the secret:

{

"containerDefinitions": [

{

"name": "fastapi",

"image": "035663780217.dkr.ecr.eu-west-1.amazonaws.com/fastapi-dev",

"secrets": [

{

"name": "DB_USER",

"valueFrom": "arn:aws:secretsmanager:eu-west-1:XXXX:secret:application:username::"

},

{

"name": "DB_PASSWORD",

"valueFrom": "arn:aws:secretsmanager:eu-west-1:XXXX:secret:application:password::"

},

{

"name": "DB_HOST",

"valueFrom": "arn:aws:secretsmanager:eu-west-1:XXXX:secret:application:host::"

},

{

"name": "DB_PORT",

"valueFrom": "arn:aws:secretsmanager:eu-west-1:XXXX:secret:application:port::"

}

],

...

}

]

}The task execution role would need a policy that allows the service to access the secret:

data "aws_kms_alias" "secrets_manager" {

name = "alias/aws/secretsmanager"

}

data "aws_iam_policy_document" "application_secret" {

statement {

effect = "Allow"

actions = [

"secretsmanager:GetSecretValue",

"kms:Decrypt"

]

resources = [

var.rds_secret_name,

data.aws_kms_alias.secrets_manager.arn

]

}

}The above grants KMS permission to decrypt the default aws/secretsmanager key to get the secret created for the application.

Next, I created a terraform_data resource that generates an updated task definition that includes the above secrets block and the task execution ARN and registers it as a new version. While this may not be the best approach, the provisioner is run only on create. Subsequent changes to the task definition template would be made manually.

From previous posts, we segreated the task definition away from the TF state so we could modify it on the fly as well as to allow the workflow to update the image in the task definition template based on the commit SHA.

An example of the terraform_data resource:

resource "terraform_data" "create_taskdef_script" {

# Create task definition from template with secrets ARN from secrets manager

provisioner "local-exec" {

command = "python ${path.module}/create_taskdef.py --db-secretid '${var.rds_secret_name}' --region '${var.region}' --execution-role-arn '${aws_iam_role.task_execution_role.arn}'"

}

}The above script runs locally and it updates the present taskdef.json template file to include the new secrets and task execution role.

Lastly, the changes need to also be made to the api application.

To allow the api service, in this case Fastapi, to communicate with the proxy, we need the following settings for SqlAlchemy:

SQLALCHEMY_DATABASE_URL = f"postgresql+psycopg2://{os.getenv('DB_USER')}:{os.getenv('DB_PASSWORD')}@{os.getenv('DB_HOST')}:{os.getenv('DB_PORT')}/{os.getenv('DB_NAME')}?sslmode=require"

engine = create_engine(

SQLALCHEMY_DATABASE_URL,

echo=True,

pool_size=10,

max_overflow=2,

pool_recycle=3600,

pool_pre_ping=True,

pool_use_lifo=True

)The environment variables are passed from a secrets manager secret which contain the RDS database username and password, as detailed in the new task definition above. On successful deploy, the credentials will be retrieved from secrets manager passed to the running service as environment variables.

Note the use of pool_size in the configuration options. These are necessary in order to prevent the application from opening too many connections to the database. However, this should not be necessary for connecting to the proxy but without it, I was unable to get SQLAlchemy to work properly with RDS proxy.

Once this is setup, we will have a matching environment both locally and in a dev/staging environment. The local setup will involve using docker compose with both the service and postgresql database as containers.

On deploy, the application container will attempt to connect to the database via the proxy.

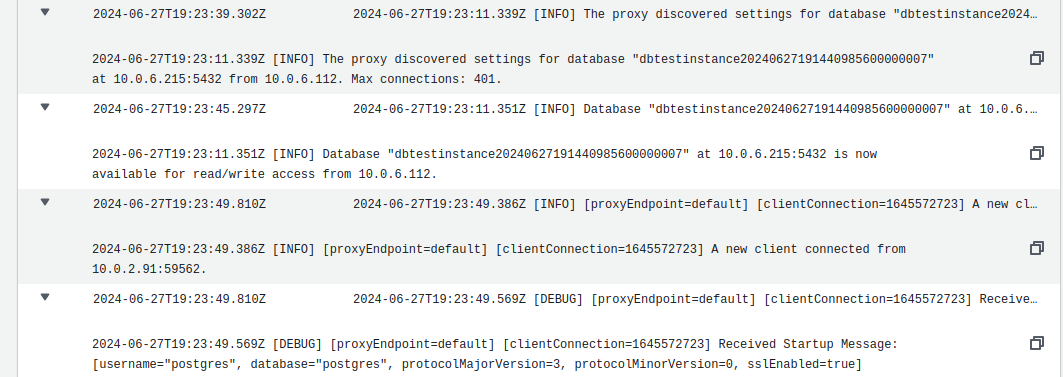

We can inspect the RDS Proxy cloudwatch logs to see the attempts made. Below is a screenshot to show each connection made to the proxy in cloudwatch logs:

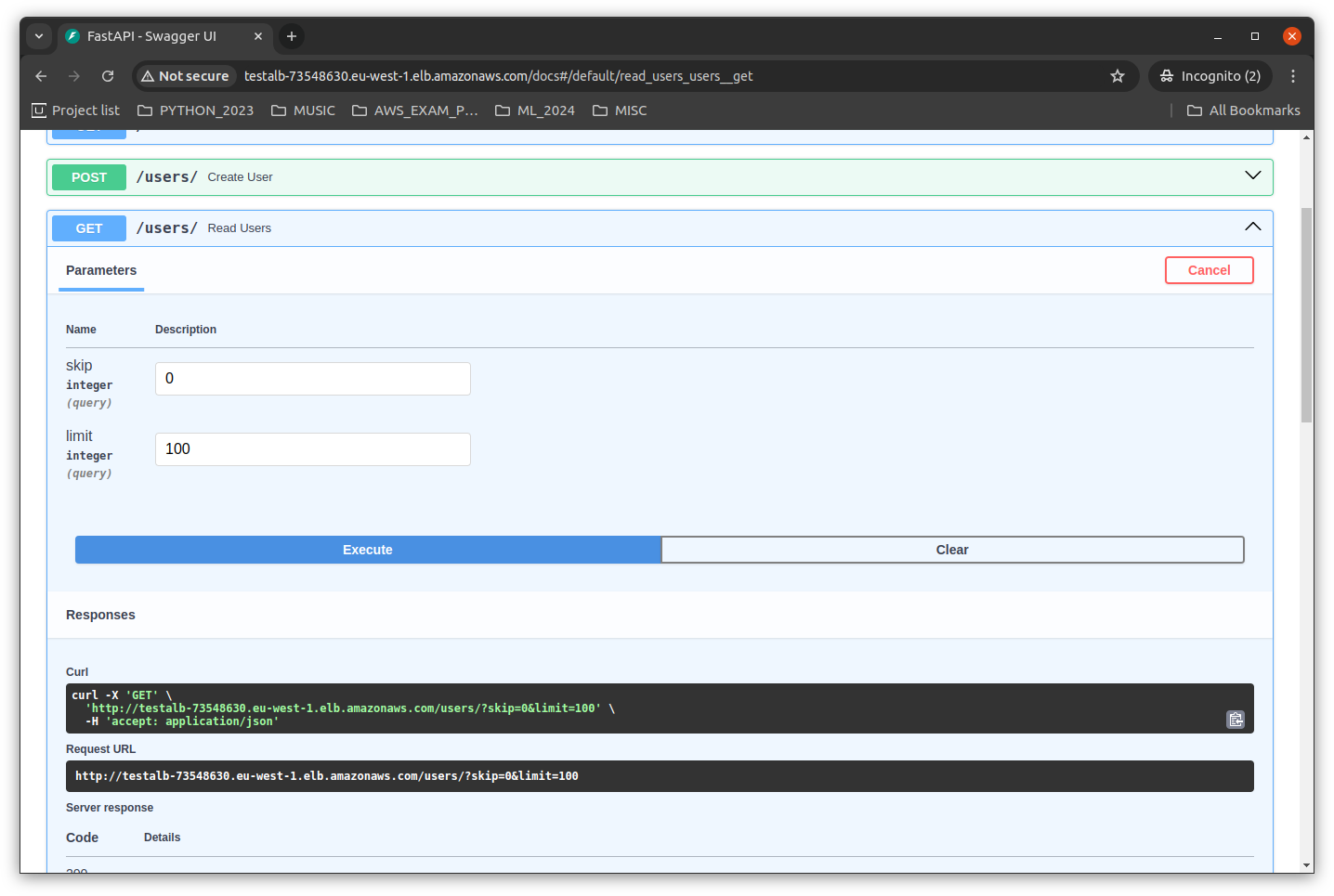

The screenshot below shows the FastAPI swagger documentation endpoint accessing the database via the users endpoint:

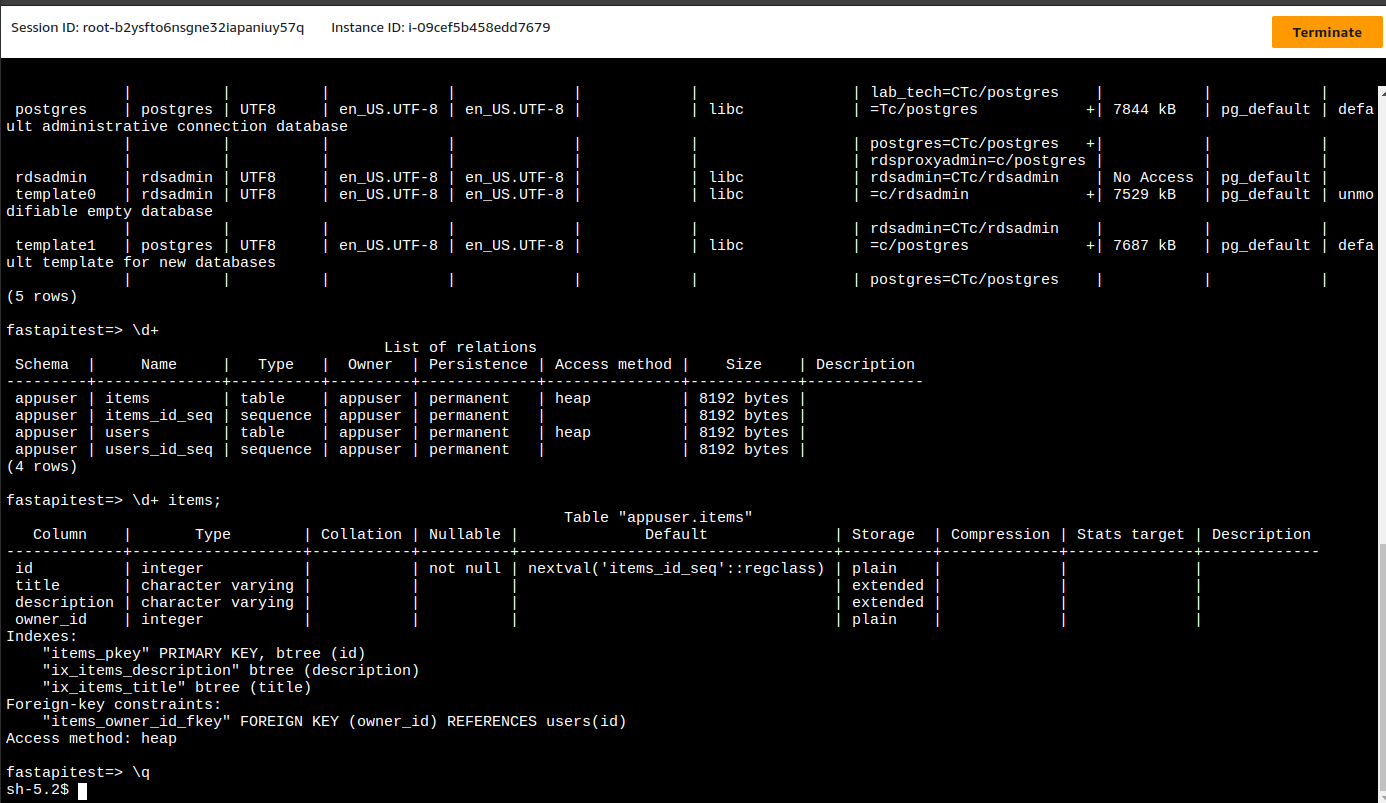

Since we added the container instance security group as an ingress to the proxy security group, we can invoke psql via systems manager on a container instance to examine the database: